Rohit Kumar is responsible for ResearchWire's European & North American business. He holds an MBA from HEC Paris (2008) and an engineering degree from the Indian Institute of Technology (IIT) Kharagpur (2002), and has close to 17 years of experience in business innovation and strategy, business development and client relationship roles. He has been with ResearchWire since 2016 and, as a partner, is responsible for the growth of ResearchWire in Europe and North America.

Prior to ResearchWire, Rohit handled sales strategy and pricing for Syniverse Technologies, a telecom services provider, where he was instrumental in driving growth in EMEA and India through product innovations, creative pricing and discounting models, and the execution of strategic sales plans.

Rohit is based in Luxembourg and has been living in Europe for the last 12 years. He understands European & North American customers and their needs, and ensures that our customers always have a local person to reach out to whenever needed.

Can Neuromorphic computing carry brain-like machines from science fiction to reality? Can computers powered by neuromorphic semiconductors support human-like machine architectures? Why are companies like Intel investing so aggressively in Neuromorphic computing?

Scientists are fascinated by the seemingly limitless possibilities of Artificial Intelligence (AI), which has sparked countless speculations about the capabilities of this transformative technology. Neuromorphic computing is an emerging field of computing that is based on the principles of neural networks and aims to develop hardware and software systems that mimic the architecture and function of the human brain.

What are the implications of neuromorphic computing for the development of artificial intelligence, and how might these technologies be used to create more advanced AI systems? This blog aims to explain how software and hardware systems are developed that can function like a human brain, their possible use cases, technological challenges, what makes them attractive for future computing, and companies that are investing in Neuromorphic computing technologies.

How Neuromorphic Computing Works

Neuromorphic computing, a concept introduced in the 1980s and inspired by the human brain and neural network principles, has recently gained prominence due to advancements in Artificial Intelligence.

Modern computers are now being designed with suitable hardware to support this technology, as traditional computers backed by CPUs lack this capability.

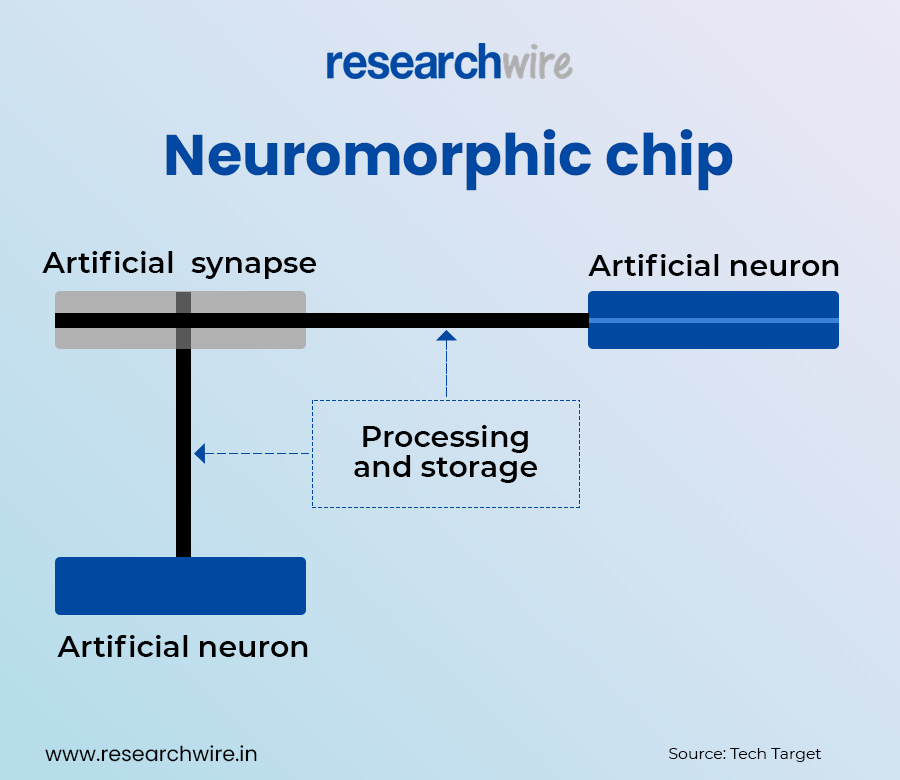

Neuromorphic systems utilize electronic circuits that mimic the functions of neurons and synapses, which are fundamental constituents of the human brain. Scientists in the field of neuromorphic computing design circuits that incorporate both digital and analog components to emulate the way biological neurons and synapses interact with one another.

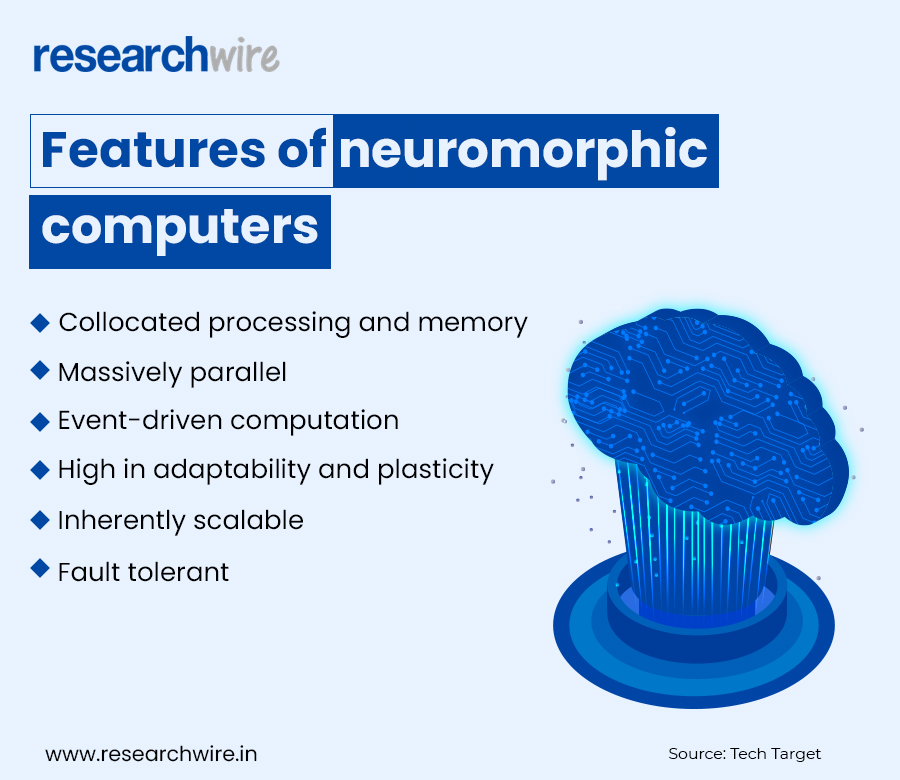

Neuromorphic computers employ a distinct high-performance computing architecture and mechanism that sets them apart from the conventional von Neumann computers, which are typically used in modern computer hardware.

The spiking neural network (SNN) is the prevalent model implemented in neuromorphic hardware. Its nodes, referred to as spiking neurons, handle and store data in a manner similar to biological neurons.
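
To make the idea of a spiking neuron more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one common textbook model of a spiking neuron. The article does not name a specific neuron model, so the choice of LIF, the class name `LIFNeuron`, and all parameter values are illustrative assumptions rather than a description of any particular chip.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Hypothetical leaky integrate-and-fire neuron (illustrative values only)."""
    tau: float = 10.0      # membrane time constant in ms (assumed)
    v_rest: float = 0.0    # resting potential
    v_thresh: float = 1.0  # firing threshold
    v_reset: float = 0.0   # potential right after a spike
    v: float = 0.0         # current membrane potential (the neuron's stored state)

    def step(self, input_current: float, dt: float = 1.0) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Leak toward the resting potential, then integrate the incoming input.
        self.v += (dt / self.tau) * (self.v_rest - self.v) + input_current
        if self.v >= self.v_thresh:
            self.v = self.v_reset  # fire and reset
            return True
        return False


def run(neuron: LIFNeuron, currents: List[float]) -> List[bool]:
    """Feed a sequence of input values and collect the resulting spike train."""
    return [neuron.step(i) for i in currents]


if __name__ == "__main__":
    spikes = run(LIFNeuron(), [0.3] * 8)
    print(spikes)
```

The membrane potential `v` is both the neuron's memory and the quantity it computes on, which is the sense in which a spiking neuron handles and stores data in the same place. Weak inputs leak away without producing a spike, while sustained inputs accumulate until the threshold is crossed.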

How Neuromorphic Computing Is Solving Today’s Problems: Potential Applications

Backed by substantial funding, Neuromorphic computing is predicted to revolutionize AI computing by allowing AI algorithms to run at the edge instead of in the cloud, thanks to the smaller size and lower power consumption of neuromorphic chips. Because it can adapt to its environment, neuromorphic hardware is expected to support applications such as driverless cars, drones, robots, smart home devices, natural language, speech and image processing, data analytics, and process optimization.

Neuromorphic computing has a long history, and it has seen significant breakthroughs since its inception in the 1980s. It is a highly funded field that holds potential for the future of AI. Neuromorphic computing research takes either a computational or neuroscience approach to advance AI.

AGI, or AI exhibiting human-level intelligence, is the goal of AI research, and neuromorphic computing has the potential to help achieve it. However, there are debates about the ethical and legal issues surrounding sentient machines.

Comparing Traditional Artificial Intelligence and Neuromorphic Computing

Traditional AI (Artificial Intelligence) and Neuromorphic computing are two different approaches to building intelligent systems. AI is a broad term that encompasses various techniques and methods for creating intelligent machines, including machine learning, deep learning, and natural language processing.

Neuromorphic computing is a subfield of AI that aims to design computer systems inspired by the structure and function of the human brain.

- One of the main differences between traditional AI and neuromorphic computing is the underlying architecture. Traditional AI algorithms are designed to run on conventional computer systems, which use a von Neumann architecture. This architecture separates the processing and memory components of a computer, resulting in a bottleneck that limits the speed and efficiency of AI algorithms. Neuromorphic computing, on the other hand, uses a non-von Neumann architecture that is designed to mimic the parallel and distributed processing of the human brain.

- Another key difference between traditional AI and neuromorphic computing is the way they learn. Traditional AI algorithms typically rely on large amounts of labelled data, while neuromorphic systems are designed to learn from unlabelled data through unsupervised learning. This makes neuromorphic computing more efficient and adaptable, as it can learn from new data in real time without requiring retraining.
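
As an illustration of learning without labels, the sketch below implements a pair-based spike-timing-dependent plasticity (STDP) rule, a local learning rule commonly used with spiking neural networks. The article does not name STDP or any other rule, so this is an assumed example; the function names and the constants `a_plus`, `a_minus`, and `tau_ms` are hypothetical.

```python
import math


def stdp_delta_w(dt_ms: float, a_plus: float = 0.01,
                 a_minus: float = 0.012, tau_ms: float = 20.0) -> float:
    """Weight change for one pre/post spike pair, where dt_ms = t_post - t_pre.

    Constants are illustrative assumptions, not values from any real chip.
    """
    if dt_ms > 0:
        # Pre-synaptic spike came first: strengthen the synapse.
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        # Post-synaptic spike came first: weaken the synapse.
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0


def update_weight(w: float, t_pre: float, t_post: float,
                  w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply the STDP change and keep the weight inside [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta_w(t_post - t_pre)))


if __name__ == "__main__":
    w = 0.5
    w = update_weight(w, t_pre=10.0, t_post=15.0)  # causal pair: weight grows
    w = update_weight(w, t_pre=30.0, t_post=22.0)  # anti-causal pair: weight shrinks
    print(w)
```

The update uses only the timing of two spikes at a single synapse, so no labelled examples are needed and each weight can be adjusted as new data arrives.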

In summary, while traditional AI and neuromorphic computing are both related to building intelligent machines, they differ in their underlying architecture and learning mechanisms. Traditional AI is more focused on algorithms that can run on conventional computer systems, while neuromorphic computing is focused on designing computer systems that more closely mimic the structure and function of the human brain.

Companies Working in Neuromorphic Computing (Intel)

According to published research reports, the Neuromorphic computing market is expected to expand at a compound annual growth rate (CAGR) of 89.1% and reach a value of roughly USD 550.6 million (USD 550,593 thousand) by 2026. This growth is driven by the demand for higher-performing integrated circuits (ICs), the rising need for artificial intelligence and machine learning, and an increase in cross-industry partnerships.
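
For readers who want to see what a CAGR of this size implies, the following sketch applies the standard compound-growth formula, end value = start value × (1 + CAGR)^years. The forecast's base year is not given here, so the five-year horizon used below is purely an assumption for illustration, and the helper names are hypothetical.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `start_value` at rate `cagr` for `years` years."""
    return start_value * (1 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value that would grow to `end_value` at rate `cagr` over `years`."""
    return end_value / (1 + cagr) ** years


if __name__ == "__main__":
    end_value_usd = 550_593_000  # 2026 figure quoted in the article
    cagr = 0.891                 # 89.1% quoted in the article
    assumed_years = 5            # ASSUMPTION: the base year is not stated
    start = implied_start_value(end_value_usd, cagr, assumed_years)
    print(f"Implied starting market size: USD {start:,.0f}")
```

At 89.1% a year the market almost doubles annually, so the implied starting size is very sensitive to the assumed number of years.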

Major market players, such as Intel Corporation and IBM Corporation, are based in North America. Government support and investment activities are the main growth drivers for the expanding market of neuromorphic chips in this region.

Intel is expanding its portfolio of AI technologies, including Xeon CPUs, Movidius computer vision chips, Mobileye chips for autonomous driving, and Deep Neural Network training and inference processing technology from the newly acquired Habana Labs. Despite having all these offerings, Intel is still pursuing Neuromorphic computing, which mimics the neuron-spiking functions of biological nervous systems.

Intel Labs recently held a press and analyst event to shed more light on the company’s work in this area, including a new server for researchers called Pohoiki Springs. This 5U server contains 768 Loihi chips with a combined 100 million neurons, consumes 500 watts, and provides roughly the neural capacity of the brain of a small mammal. Intel Labs and Cornell University showcased the new system with an interesting application that uses these neuromorphic chips to “smell” and detect hazardous substances quickly and accurately in the presence of noisy data. Loihi processes information up to 1,000 times faster and 10,000 times more efficiently than traditional processors, making it a powerful tool for solving problems that are currently beyond our grasp.
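
The Pohoiki Springs figures quoted above can be broken down into rough per-chip and per-neuron averages. The sketch below is simple arithmetic on those numbers; it assumes the full 500 watts is shared evenly across the chips, which ignores any other server components, and the function name is hypothetical.

```python
def per_unit_averages(total_neurons: int, chips: int, total_watts: float) -> dict:
    """Rough averages derived from system-level figures.

    ASSUMPTION: all power is attributed evenly to the chips, ignoring
    cooling, networking, and other server overhead.
    """
    return {
        "neurons_per_chip": total_neurons / chips,
        "watts_per_chip": total_watts / chips,
        "microwatts_per_neuron": total_watts / total_neurons * 1e6,
    }


if __name__ == "__main__":
    # Figures as quoted in the article for Pohoiki Springs.
    stats = per_unit_averages(total_neurons=100_000_000, chips=768, total_watts=500.0)
    for name, value in stats.items():
        print(f"{name}: {value:,.3f}")
```

On these figures each chip averages about 130,000 neurons and well under one watt, which helps explain why this class of hardware is seen as suited to power-constrained settings.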

Future Predictions of Neuromorphic Computing

The widespread use and increasing popularity of AI, machine learning, neural networks, and deep neural network architectures in consumer and enterprise technology have contributed to recent advancements in neuromorphic research. Another factor is the anticipated end of Moore’s Law, which many IT experts predict will occur soon. With the end of traditional architectures in sight, chip manufacturers are turning to neuromorphic computing as a potential solution to achieve greater efficiency.

Recent research has focused on developing new hardware, such as microcombs, to power neuromorphic computing systems. Microcombs are optical devices capable of generating or measuring highly precise colour frequencies, and they have enabled neuromorphic processors to perform up to 10 trillion operations per second. Microcomb technology also holds great potential for detecting light from remote planets and analysing exhaled breath to diagnose diseases at an early stage.

Major chip manufacturers, including IBM and Intel, as well as the United States military, are showing a keen interest in neuromorphic computing’s potential to improve the efficiency of autonomous devices like driverless cars and drones. Neuromorphic technology can enhance the learning capabilities of these devices, making them smarter and more efficient.

Limitations of Neuromorphic Computing technology

Neuromorphic computing has the potential to transform the capabilities of AI and advance our understanding of cognition, but it faces several limitations: underdeveloped software algorithms, limited accuracy, a lack of standardized benchmarks, limited accessibility for non-experts, and constraints imposed by our current understanding of human cognition. Possible solutions include incorporating technologies from other fields such as probabilistic and quantum computing.

Conclusion

Neuromorphic computing is predicted to revolutionize AI computing by allowing AI algorithms to run at the edge instead of in the cloud, thanks to the smaller size and lower power consumption of neuromorphic hardware. If Neuromorphic computing-backed technologies are part of your innovation work, you are best advised to file a patent application to protect them. Get in touch with our IP experts at ResearchWire to check whether your Neuromorphic computing-driven innovations can be patented.